⚡️ TOON: The New Format That Could Challenge JSON in the LLM Era

Discover TOON (Token-Oriented Object Notation), a compact data format built to optimize structured data for LLM consumption, reducing token usage and costs.

When working with large language models (LLMs), every token matters. Tokens directly affect cost, latency, and how much useful context you can fit into a prompt.

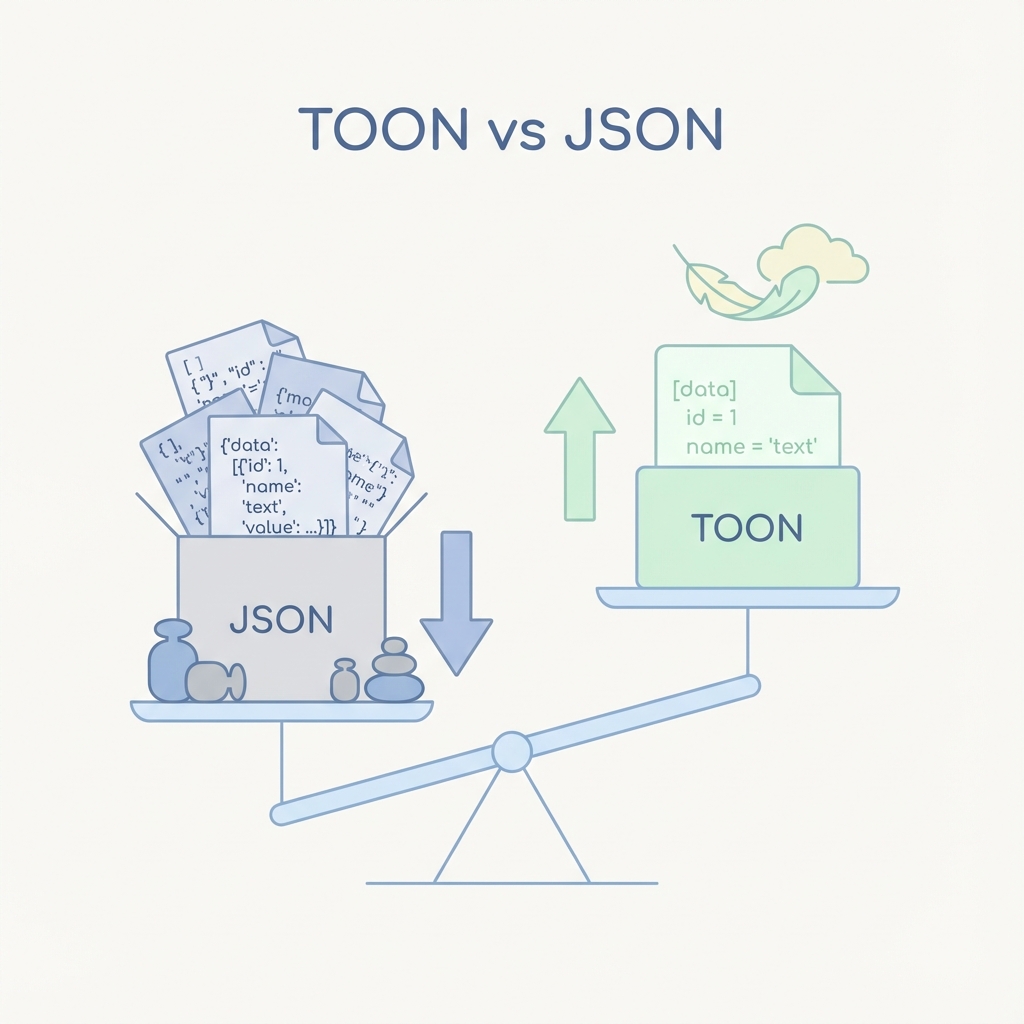

JSON, while excellent for traditional APIs and data exchange, was never designed for a token-based pricing model. Brackets, commas, quotes, and repeated field names add up quickly.

That’s where TOON (Token-Oriented Object Notation) comes in — a data format built specifically to optimize structured data for LLM consumption.

What is TOON?

TOON (Token-Oriented Object Notation) is a compact, human-readable data serialization format that represents the same information as JSON, often with significantly fewer tokens.

It keeps the conceptual model of JSON (objects, arrays, fields) while aggressively removing syntactic overhead that is irrelevant for LLM reasoning.

In short: same data, fewer tokens, lower cost.

JSON vs TOON (Quick Example)

JSON example:

{
  "users": [
    { "id": 1, "name": "Alice", "role": "admin" },
    { "id": 2, "name": "Bob", "role": "user" }
  ]
}

TOON example:

users[2]{id,name,role}:
  1,Alice,admin
  2,Bob,user

What changed?

- Field names are declared once, in the header, together with the number of rows ([2]).

- There are no quotes, braces, or redundant punctuation.

- The data is represented in a compact, tabular form.
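To make the transformation concrete, here is a minimal Python sketch that turns a list of flat dictionaries with identical fields into the tabular form shown above. It is not the official TOON library: the function names `to_toon_table` and `format_value` are hypothetical, and the quoting rule is a simplified assumption (strings are quoted only when empty, padded with whitespace, or containing a comma, colon, or double quote), not the full TOON specification.

```python
import json


def format_value(value):
    """Render one primitive cell using simplified quoting rules (not the full TOON spec)."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    text = str(value)
    # Simplified assumption: quote only strings that would be ambiguous unquoted.
    needs_quotes = text == "" or text != text.strip() or any(ch in text for ch in ',:"')
    return json.dumps(text) if needs_quotes else text


def to_toon_table(key, rows, indent="  "):
    """Encode a non-empty list of flat dicts with identical keys as a TOON-style table."""
    if not rows:
        raise ValueError("expected at least one row")
    fields = list(rows[0].keys())
    for row in rows:
        if list(row.keys()) != fields:
            raise ValueError("every row must have the same fields in the same order")
    # Header: array name, row count, and the field list, declared once.
    lines = [f"{key}[{len(rows)}]{{{','.join(fields)}}}:"]
    # One indented line per record, values in header order.
    for row in rows:
        lines.append(indent + ",".join(format_value(row[field]) for field in fields))
    return "\n".join(lines)


users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]
print(to_toon_table("users", users))
```

Running it prints the same three lines as the TOON example. Real encoders also handle nested structures and many more quoting edge cases, so the project's own packages are the better choice for production use.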

Why This Matters for LLMs

LLMs read text as tokens, and structural characters such as braces, quotes, and commas consume tokens just like the data itself. Every one of them counts.

JSON wastes tokens on:

- Repeated keys for each object

- Quotes around every string

- Brackets, commas, and colons everywhere

TOON removes most of this noise, achieving 30–60% token reduction in many real-world payloads, especially when working with lists of records, logs, tables, and structured datasets with uniform fields.
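The size difference is easy to check yourself. The sketch below builds synthetic user records (hypothetical data, not a benchmark) and compares compact JSON with the equivalent TOON table by character count. Characters are only a rough proxy: real token counts depend on the model's tokenizer, so for actual numbers run both strings through the tokenizer of the model you target (for example, OpenAI's tiktoken library).

```python
import json


def sample_users(n):
    """Synthetic records for illustration only, not real data."""
    return [{"id": i, "name": f"user{i}", "role": "user"} for i in range(1, n + 1)]


def as_json(users):
    # Compact JSON (no extra whitespace) is the fairest baseline to compare against.
    return json.dumps({"users": users}, separators=(",", ":"))


def as_toon(users):
    # Tabular TOON: field names once in the header, then one indented line per record.
    header = f"users[{len(users)}]{{id,name,role}}:"
    rows = [f"  {u['id']},{u['name']},{u['role']}" for u in users]
    return "\n".join([header] + rows)


for n in (2, 10, 100):
    users = sample_users(n)
    json_chars, toon_chars = len(as_json(users)), len(as_toon(users))
    print(f"{n:>3} rows: JSON {json_chars} chars, TOON {toon_chars} chars, "
          f"{1 - toon_chars / json_chars:.0%} fewer characters")
```

Because the field names appear once instead of once per record, the absolute gap grows with the number of rows.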

The direct benefits are clear:

- Lower API costs

- More room for instructions and reasoning

- Potentially lower latency

- Better scalability for agent pipelines

Where TOON Shines (and Where It Doesn’t)

TOON works especially well when:

- You send large arrays of uniform objects

- You feed structured data to LLMs for analysis

- You need to optimize prompt payload size

- You compress agent memory or context

However, it has limitations:

- It does not replace JSON for traditional APIs

- Benefits decrease with highly irregular or deeply nested data

- LLMs must be explicitly instructed on how to read the format

- The ecosystem is still early compared to JSON
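One way to respect these limits is to check a payload's shape before choosing a format. TOON's compact tabular form fits arrays whose objects share the same fields and hold only primitive values; for other shapes the savings shrink. The sketch below uses hypothetical helpers (`is_tabular` and `choose_format` are illustrative names, not part of any TOON package) and simply falls back to JSON whenever a list is not uniform.

```python
def is_tabular(rows):
    """True if rows is a non-empty list of dicts with identical keys and primitive values only."""
    if not isinstance(rows, list) or not rows:
        return False
    if not all(isinstance(row, dict) for row in rows):
        return False
    fields = set(rows[0])
    primitive = (str, int, float, bool, type(None))
    return all(
        set(row) == fields and all(isinstance(v, primitive) for v in row.values())
        for row in rows
    )


def choose_format(rows):
    # Hypothetical policy: use TOON only for uniform, flat records; otherwise keep JSON.
    return "toon" if is_tabular(rows) else "json"


regular = [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]
irregular = [{"id": 1, "name": "Alice"}, {"id": 2, "tags": ["a", "b"]}]
nested = [{"id": 1, "address": {"city": "Lima"}}]

for label, rows in (("regular", regular), ("irregular", irregular), ("nested", nested)):
    print(label, "->", choose_format(rows))
```

Here only the regular list is routed to TOON; the irregular and nested payloads stay in JSON.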

The key insight: TOON is an optimization layer for LLM workflows, not a universal data format.

Tooling and Ecosystem

TOON is open source and already usable today.

The official repository is available at: github.com/toon-format/toon

Available tools include:

- JSON ⇄ TOON converters

- CLI tools

- npm packages

- JavaScript and Python SDKs

- Token comparison utilities

This makes it easy to experiment, benchmark, and adopt TOON incrementally.
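Converters work in both directions. As a rough illustration of the TOON-to-JSON direction, the sketch below parses a single tabular array back into Python data and checks the declared row count against the rows it actually finds. It is a simplified, hypothetical parser (`from_toon_table`, `split_row`, and `parse_cell` are illustrative names) that handles only one flat, comma-delimited table; it is not the official converter.

```python
import json
import re

# Header such as users[2]{id,name,role}: (simplified: word-character keys only).
HEADER = re.compile(r"(\w+)\[(\d+)\]\{([^}]*)\}:")
# Plain JSON-style numbers; any other unquoted cell stays a string.
NUMBER = re.compile(r"-?\d+(?:\.\d+)?(?:[eE][+-]?\d+)?")


def split_row(line):
    """Split a row on commas, keeping double-quoted cells (with escapes) intact."""
    cells, current, in_quotes, escaped = [], "", False, False
    for ch in line:
        if in_quotes:
            current += ch
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_quotes = False
        elif ch == '"':
            in_quotes = True
            current += ch
        elif ch == ",":
            cells.append(current)
            current = ""
        else:
            current += ch
    cells.append(current)
    return cells


def parse_cell(cell):
    """Convert one cell to a Python value."""
    if cell.startswith('"'):
        return json.loads(cell)  # quoted string with JSON-style escapes
    if cell == "null":
        return None
    if cell in ("true", "false"):
        return cell == "true"
    if NUMBER.fullmatch(cell):
        return float(cell) if any(c in cell for c in ".eE") else int(cell)
    return cell


def from_toon_table(text):
    """Parse one TOON-style table into {key: [row dicts]}, validating its shape."""
    lines = text.strip().splitlines()
    match = HEADER.fullmatch(lines[0])
    if match is None:
        raise ValueError("expected a header like users[2]{id,name,role}:")
    key, count, fields = match.group(1), int(match.group(2)), match.group(3).split(",")
    rows = []
    for line in lines[1:]:
        cells = split_row(line.strip())
        if len(cells) != len(fields):
            raise ValueError(f"row has {len(cells)} values, expected {len(fields)}")
        rows.append(dict(zip(fields, map(parse_cell, cells))))
    if len(rows) != count:
        raise ValueError(f"header declares {count} rows, found {len(rows)}")
    return {key: rows}


toon_text = "users[2]{id,name,role}:\n  1,Alice,admin\n  2,Bob,user"
print(json.dumps(from_toon_table(toon_text)))
```

The declared row count in the header gives the parser a cheap integrity check: a truncated or padded table is rejected instead of silently accepted.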

A Real-World Use Case

Imagine sending a list of users, permissions, or transactions to an LLM for business rule generation, validation, analytics, or decision support.
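Since LLMs need to be told how to read TOON, a request like this would pair the data with a short explanation of the format. The sketch below shows one way to assemble such a prompt; `build_prompt` and the wording of the format note are hypothetical and would need adapting to your model and task.

```python
def build_prompt(task, toon_data):
    """Prepend a short, hypothetical explanation of TOON so the model can read the data."""
    format_note = (
        "The data below uses TOON (Token-Oriented Object Notation). "
        "A header such as users[2]{id,name,role}: declares an array named users "
        "with 2 rows and the fields id, name and role. Each indented line after "
        "the header is one row, with values in the same order as the fields."
    )
    return f"{format_note}\n\n{toon_data}\n\nTask: {task}"


data = "users[2]{id,name,role}:\n  1,Alice,admin\n  2,Bob,user"
prompt = build_prompt("List the names of all users with the admin role.", data)
print(prompt)
```

The format note costs a fixed number of tokens per request, so it pays off once the data itself is large enough.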

With JSON, a large portion of tokens is structural overhead. With TOON, almost every token carries actual information.

At scale, this can translate into thousands of tokens saved per request, meaningful monthly cost reductions, and more of the context window left for instructions and reasoning, which can make model outputs more reliable.
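A back-of-the-envelope calculation shows how per-request savings add up. Every figure in the sketch below is a hypothetical input, not a measurement or a real price; substitute your own request volume, measured token savings, and your provider's input-token price. The helper name `monthly_savings` is illustrative.

```python
def monthly_savings(tokens_saved_per_request, requests_per_day, usd_per_million_tokens, days=30):
    """Estimate monthly savings in USD; every argument is an assumption you supply."""
    tokens_saved_per_month = tokens_saved_per_request * requests_per_day * days
    return tokens_saved_per_month / 1_000_000 * usd_per_million_tokens


# Hypothetical inputs, not measurements or real prices:
# 2,000 tokens saved per request, 10,000 requests per day, $2.50 per million input tokens.
estimate = monthly_savings(2_000, 10_000, 2.50)
print(f"${estimate:,.2f} saved per month")  # prints "$1,500.00 saved per month"
```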

Strategic Takeaway

TOON isn’t trying to replace JSON — it’s solving a different problem.

As LLM-driven systems grow:

- Token efficiency becomes an architectural concern

- Prompt payloads turn into data pipelines

- Cost optimization becomes a product decision

If you’re sending large structured payloads to LLMs, you’re likely paying for commas you don’t need.

TOON offers a practical and measurable way to fix that — today.

About the Author

I am Guillermo Miranda, a Salesforce Consultant specializing in defining and developing scalable solutions for businesses.

Need help with Salesforce?

I help businesses design and build scalable Salesforce solutions.